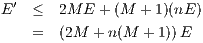

$$u'' + b(x)\,u' + c(x)\,u = 0 \tag{1}$$

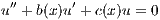

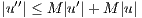

on the interval $[0,A]$ and that the coefficients $b(x)$ and $c(x)$ are both bounded, say $|b(x)| \le M$ and $|c(x)| \le M$ (if the coefficients are continuous, this is always true for some $M$).

- a)

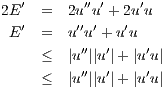

- Define E(x) :=

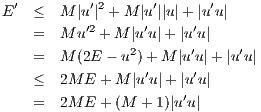

(u′2 + u2). Show that for some constant γ (depending on M) we have E′(x) ≤ γE(x).

[Suggestion; use the inequality 2xy ≤ x2 + y2.]


- b) Use Problem 3(c) above to show that $E(x) \le e^{\gamma x}E(0)$ for all $x \in [0,A]$.

- c) In particular, if $u(0) = 0$ and $u'(0) = 0$, show that $E(x) = 0$ and hence $u(x) = 0$ for all $x \in [0,A]$. In other words, if $u'' + b(x)u' + c(x)u = 0$ on the interval $[0,A]$, the functions $b(x)$ and $c(x)$ are both bounded, and $u(0) = 0$ and $u'(0) = 0$, then the only possibility is that $u(x) \equiv 0$ for all $x \in [0,A]$.

- d) Use this to prove the uniqueness theorem: if $v(x)$ and $w(x)$ both satisfy equation (1) and have the same initial conditions, $v(0) = w(0)$ and $v'(0) = w'(0)$, then $v(x) \equiv w(x)$ on the interval $[0,A]$.
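The estimates in parts (b) through (d) can be sanity-checked numerically; the sketch below illustrates them but proves nothing. Several things in it are assumptions made only for illustration and do not come from the problem statement: the sample coefficients $b(x) = \sin x$ and $c(x) = \cos x$ (so $M = 1$), the interval length $A = 2$, the classical RK4 integrator, the helper names `solve` and `energy`, and the factor $\tfrac{1}{2}$ in $E$. The value $\gamma = 3M + 1$ is one constant that the suggested inequality appears to give; any larger constant works as well.

```python
import math

def solve(b, c, u0, p0, A, n=2000):
    """Integrate u'' + b(x) u' + c(x) u = 0 on [0, A] with classical RK4.

    Returns a list of (x, u, u') samples on a uniform grid with n steps.
    """
    def f(x, u, p):
        # Equivalent first-order system: u' = p, p' = -b(x) p - c(x) u
        return p, -b(x) * p - c(x) * u

    h = A / n
    x, u, p = 0.0, u0, p0
    out = [(x, u, p)]
    for i in range(n):
        k1u, k1p = f(x, u, p)
        k2u, k2p = f(x + h / 2, u + h / 2 * k1u, p + h / 2 * k1p)
        k3u, k3p = f(x + h / 2, u + h / 2 * k2u, p + h / 2 * k2p)
        k4u, k4p = f(x + h, u + h * k3u, p + h * k3p)
        u += h / 6 * (k1u + 2 * k2u + 2 * k3u + k4u)
        p += h / 6 * (k1p + 2 * k2p + 2 * k3p + k4p)
        x = (i + 1) * h
        out.append((x, u, p))
    return out

def energy(u, p):
    # Assumed form E = (u'^2 + u^2) / 2; the constant factor does not affect gamma.
    return 0.5 * (p * p + u * u)

def within_bound(samples, gamma, slack=1e-9):
    # Check E(x) <= exp(gamma x) E(0) at every grid point, with a tiny rounding slack.
    e0 = energy(samples[0][1], samples[0][2])
    return all(energy(u, p) <= math.exp(gamma * x) * e0 * (1 + slack)
               for x, u, p in samples)

M = 1.0              # assumed bound on |sin x| and |cos x|
gamma = 3 * M + 1    # one admissible constant (assumption, see lead-in)
A = 2.0

# Part (b): the energy of a sample solution stays under exp(gamma x) E(0).
v = solve(math.sin, math.cos, 1.0, 0.0, A)
bound_holds = within_bound(v, gamma)

# Part (c): zero initial data gives the zero solution.
z = solve(math.sin, math.cos, 0.0, 0.0, A)
stays_zero = all(u == 0.0 and p == 0.0 for _, u, p in z)

# Part (d): by linearity, the difference of two solutions obeys the same bound.
w = solve(math.sin, math.cos, 1.001, 0.0, A)
diff = [(x, u2 - u1, p2 - p1) for (x, u1, p1), (_, u2, p2) in zip(v, w)]
diff_bound_holds = within_bound(diff, gamma)

print(bound_holds, stays_zero, diff_bound_holds)
```

The last check mirrors the idea behind part (d): because equation (1) is linear, the difference of two solutions is again a solution, so its energy is controlled by its initial energy.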
